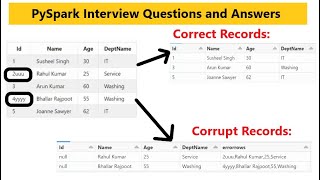

11. How to handle corrupt records in pyspark | How to load Bad Data in error file pyspark | #pyspark
