Similar Tracks

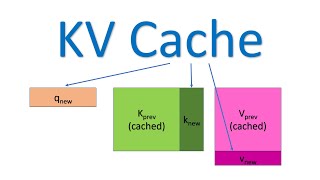

How to make LLMs fast: KV Caching, Speculative Decoding, and Multi-Query Attention | Cursor Team

Lex Clips

LLaMA explained: KV-Cache, Rotary Positional Embedding, RMS Norm, Grouped Query Attention, SwiGLU

Umar Jamil