Similar Tracks

Efficiently Modeling Long Sequences with Structured State Spaces - Albert Gu | Stanford MLSys #46

Stanford MLSys Seminars

Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters (Paper)

Yannic Kilcher

Mamba, Mamba-2 and Post-Transformer Architectures for Generative AI with Albert Gu - 693

The TWIML AI Podcast with Sam Charrington

TokenFormer: Rethinking Transformer Scaling with Tokenized Model Parameters (Paper Explained)

Yannic Kilcher

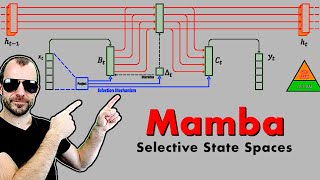

Mamba and S4 Explained: Architecture, Parallel Scan, Kernel Fusion, Recurrent, Convolution, Math

Umar Jamil

Kolmogorov-Arnold Networks (KANs): Redefining Neural Nets? | MLBBQ | Theodore LaGrow

Understanding Machine Learning